Memory Ordering in Rust: A Guide to Safe Concurrency

Lukas Schneider

DevOps Engineer · Leapcell

In concurrent programming, correctly managing the order of memory operations is key to ensuring program correctness. Rust provides atomic operations and the Ordering enumeration, allowing developers to safely and efficiently manipulate shared data in a multithreaded environment. This article aims to provide a detailed introduction to the principles and usage of Ordering in Rust, helping developers better understand and utilize this powerful tool.

Fundamentals of Memory Ordering

Modern processors and compilers reorder instructions and memory operations to optimize performance. While this reordering usually does not cause issues in single-threaded programs, it can lead to data races and inconsistent states in a multithreaded environment if not properly controlled. To address this issue, the concept of memory ordering was introduced, allowing developers to specify memory ordering for atomic operations to ensure correct synchronization of memory access in concurrent environments.

The Ordering Enumeration in Rust

The Ordering enumeration in Rust's standard library provides different levels of memory order guarantees, allowing developers to choose an appropriate ordering model based on specific needs. The following are the available memory ordering options in Rust:

Relaxed

Relaxed provides the weakest guarantee: each operation is atomic, and all threads agree on the order of modifications to that one atomic variable, but it imposes no ordering relative to operations on other memory locations. This is suitable for simple counters or status flags, where the relative order of surrounding operations does not affect the correctness of the program.

Acquire and Release

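Acquire and Release work as a pair to establish a happens-before relationship between threads. Release applies to stores: reads and writes the thread performed before the Release store cannot be reordered after it. Acquire applies to loads: reads and writes after the Acquire load cannot be reordered before it. When an Acquire load reads the value written by a Release store, everything the storing thread wrote before that store is visible to the loading thread. These orderings are commonly used to implement locks and other synchronization primitives, ensuring that resources are properly initialized before access.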
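To make the lock use case concrete, here is a minimal sketch of a spinlock built on one `AtomicBool`. The `SpinLock` type, its `with` method, and the `locked_sum` helper are names invented for this illustration, not part of the standard library, and the sketch ignores panics inside the closure (the lock would stay held). In real code `std::sync::Mutex` is the better choice.

```rust
use std::cell::UnsafeCell;
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;
use std::thread;

/// Illustrative spinlock (hypothetical type, not from the standard library).
pub struct SpinLock<T> {
    locked: AtomicBool,
    value: UnsafeCell<T>,
}

// Safety: `value` is only accessed while `locked` is held by the caller.
unsafe impl<T: Send> Sync for SpinLock<T> {}

impl<T> SpinLock<T> {
    pub fn new(value: T) -> Self {
        SpinLock {
            locked: AtomicBool::new(false),
            value: UnsafeCell::new(value),
        }
    }

    /// Runs `f` with exclusive access to the protected value.
    pub fn with<R>(&self, f: impl FnOnce(&mut T) -> R) -> R {
        // Acquire on success: everything the previous holder wrote before
        // its Release store is visible once we own the lock.
        while self
            .locked
            .compare_exchange_weak(false, true, Ordering::Acquire, Ordering::Relaxed)
            .is_err()
        {
            std::hint::spin_loop();
        }
        let result = f(unsafe { &mut *self.value.get() });
        // Release: publish our writes to whichever thread acquires next.
        self.locked.store(false, Ordering::Release);
        result
    }
}

/// Hypothetical helper: `threads` threads each add 1 to a plain u64 `iters` times.
pub fn locked_sum(threads: usize, iters: u64) -> u64 {
    let lock = Arc::new(SpinLock::new(0u64));
    let handles: Vec<_> = (0..threads)
        .map(|_| {
            let lock = Arc::clone(&lock);
            thread::spawn(move || {
                for _ in 0..iters {
                    lock.with(|v| *v += 1);
                }
            })
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    lock.with(|v| *v)
}

fn main() {
    println!("sum = {}", locked_sum(4, 10_000));
}
```

The protected value is an ordinary `u64`, not an atomic. The Acquire/Release pair on `locked` is what makes each holder's writes visible to the next holder.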

AcqRel

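AcqRel combines the effects of Acquire and Release, making it suitable for read-modify-write operations such as fetch_add or compare_exchange: the load half of the operation behaves as an Acquire and the store half as a Release, so the operation is ordered in both directions relative to other threads.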

SeqCst

SeqCst, or sequential consistency, provides the strongest ordering guarantee. On top of Acquire/Release semantics, it ensures that all SeqCst operations appear in a single total order that every thread agrees on, making it suitable for scenarios that require a globally consistent execution order.

Practical Usage of Ordering

Choosing the appropriate Ordering is crucial. An overly relaxed ordering may lead to logical errors in the program, while an overly strict ordering may unnecessarily reduce performance. Below are several Rust code examples demonstrating the use of Ordering.

Example 1: Using Relaxed for a Simple Counter in a Multithreaded Environment

This example demonstrates how to use Relaxed ordering in a multithreaded environment for a simple counting operation.

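    use std::sync::atomic::{AtomicUsize, Ordering};
    use std::sync::Arc;
    use std::thread;

    fn main() {
        // Arc lets the spawned thread and main both own the counter;
        // moving a bare AtomicUsize into the closure would make the
        // final load a use-after-move compile error.
        let counter = Arc::new(AtomicUsize::new(0));
        let counter_clone = Arc::clone(&counter);

        thread::spawn(move || {
            counter_clone.fetch_add(1, Ordering::Relaxed);
        })
        .join()
        .unwrap();

        println!("Counter: {}", counter.load(Ordering::Relaxed));
    }

- Here, an atomic counter `counter` of type `AtomicUsize` is created and initialized to 0. It is wrapped in an `Arc` so that the spawned thread and the main thread can both own it.
- A new thread is spawned using `thread::spawn`, in which the `fetch_add` operation is performed on the counter, incrementing its value by 1.
- `Ordering::Relaxed` ensures that the increment is performed atomically, but it does not order the increment relative to other memory operations. If multiple threads perform `fetch_add` on `counter` concurrently, no increment is lost, but the order in which the increments take effect is unpredictable.
- The main thread reads the final value only after `join()` returns. Joining a thread synchronizes with everything that thread did, so the `Relaxed` load is guaranteed to see the increment.
- `Relaxed` is suitable for simple counting scenarios where we only care about the final count rather than the specific order of operations.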
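The claim that concurrent `Relaxed` increments are never lost can be checked with several threads. The following is a small sketch; the helper name `count_relaxed` and the thread and iteration counts are arbitrary choices for illustration.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;

/// Spawns `threads` threads that each increment a shared counter
/// `per_thread` times with Relaxed ordering, then returns the total.
fn count_relaxed(threads: usize, per_thread: usize) -> usize {
    let counter = Arc::new(AtomicUsize::new(0));
    let handles: Vec<_> = (0..threads)
        .map(|_| {
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                for _ in 0..per_thread {
                    // Atomic read-modify-write: no increment can be lost,
                    // even though no cross-variable ordering is requested.
                    counter.fetch_add(1, Ordering::Relaxed);
                }
            })
        })
        .collect();

    // join() synchronizes with each thread, so the load below sees
    // every increment.
    for handle in handles {
        handle.join().unwrap();
    }
    counter.load(Ordering::Relaxed)
}

fn main() {
    println!("total = {}", count_relaxed(8, 10_000));
}
```

The total is always `threads * per_thread`. What `Relaxed` leaves unspecified is only how the increments from different threads interleave.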

Example 2: Using Acquire and Release to Synchronize Data Access

This example demonstrates how to use Acquire and Release to synchronize data access between two threads.

    use std::sync::{Arc, atomic::{AtomicBool, Ordering}};
    use std::thread;

    fn main() {
        let data_ready = Arc::new(AtomicBool::new(false));
        let data_ready_clone = Arc::clone(&data_ready);

        // Producer thread
        let producer = thread::spawn(move || {
            // Prepare data
            // ...
            data_ready_clone.store(true, Ordering::Release);
        });

        // Consumer thread
        let consumer = thread::spawn(move || {
            while !data_ready.load(Ordering::Acquire) {
                // Wait until data is ready
                std::hint::spin_loop();
            }
            // Safe to access the data prepared by producer
        });

        // Wait for both threads so main does not exit while they run
        producer.join().unwrap();
        consumer.join().unwrap();
    }

- Here, an `AtomicBool` flag `data_ready` is created to indicate whether the data is ready, initialized to `false`.
- `Arc` is used to share `data_ready` safely among multiple threads.
- The producer thread prepares data and then updates `data_ready` to `true` using the `store` method with `Ordering::Release`, indicating that the data is ready.
- The consumer thread continuously checks `data_ready` using the `load` method with `Ordering::Acquire` in a loop until its value becomes `true`.
- Here, `Acquire` and `Release` are used together to ensure that all operations performed by the producer before setting `data_ready` to `true` are visible to the consumer thread before it proceeds to access the prepared data.
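Example 2 elides the data itself. The sketch below fills that gap under stated assumptions: the payload is a single `u64` held in an `AtomicU64` and written with `Relaxed`, and the `Slot` struct and `publish_and_read` function are hypothetical names used only for this illustration.

```rust
use std::sync::atomic::{AtomicBool, AtomicU64, Ordering};
use std::sync::Arc;
use std::thread;

/// Hypothetical shared slot: a payload plus a "ready" flag.
struct Slot {
    payload: AtomicU64,
    ready: AtomicBool,
}

/// The producer publishes `value`; the consumer waits for the flag and
/// returns the payload it observes.
fn publish_and_read(value: u64) -> u64 {
    let slot = Arc::new(Slot {
        payload: AtomicU64::new(0),
        ready: AtomicBool::new(false),
    });

    let producer = {
        let slot = Arc::clone(&slot);
        thread::spawn(move || {
            // The payload write itself carries no ordering...
            slot.payload.store(value, Ordering::Relaxed);
            // ...the Release store on the flag publishes it.
            slot.ready.store(true, Ordering::Release);
        })
    };

    let consumer = {
        let slot = Arc::clone(&slot);
        thread::spawn(move || {
            // Once this Acquire load reads `true`, the payload write
            // that preceded the Release store is visible.
            while !slot.ready.load(Ordering::Acquire) {
                std::hint::spin_loop();
            }
            slot.payload.load(Ordering::Relaxed)
        })
    };

    producer.join().unwrap();
    consumer.join().unwrap()
}

fn main() {
    println!("consumer read {}", publish_and_read(42));
}
```

If both flag operations were `Relaxed` instead, the language would no longer guarantee that the consumer sees the payload, even though the code might appear to work on some hardware.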

Example 3: Using AcqRel for Read-Modify-Write Operations

This example demonstrates how to use AcqRel to ensure correct synchronization during a read-modify-write operation.

    use std::sync::{Arc, atomic::{AtomicUsize, Ordering}};
    use std::thread;

    fn main() {
        let some_value = Arc::new(AtomicUsize::new(0));
        let some_value_clone = Arc::clone(&some_value);

        // Modification thread
        thread::spawn(move || {
            // Here, `fetch_add` both reads and modifies the value, so `AcqRel` is used
            some_value_clone.fetch_add(1, Ordering::AcqRel);
        })
        .join()
        .unwrap();

        println!("some_value: {}", some_value.load(Ordering::SeqCst));
    }

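- `AcqRel` is a combination of `Acquire` and `Release`, suitable for operations that both read (acquire) and modify (release) data.
- In this example, `fetch_add` is a read-modify-write (RMW) operation. It reads the current value of `some_value`, increments it by 1, and writes back the new value as a single atomic step. With `AcqRel`, this operation ensures:
  - The read half has Acquire semantics: if it reads a value written by a Release operation in another thread, everything that thread wrote before that operation is visible to the current thread. (An atomic RMW always reads the latest value in the variable's modification order, whatever ordering is used.)
  - The write half has Release semantics: any thread that later reads the new value with Acquire also sees everything the current thread wrote before the `fetch_add`. Release does not make the write visible any sooner.
- Using `AcqRel` ensures that:
  - Any read or write operations before `fetch_add` will not be reordered after it.
  - Any read or write operations after `fetch_add` will not be reordered before it.
  - This guarantees correct synchronization when modifying `some_value`.
- In this small program the `join()` already synchronizes the two threads, so the ordering matters only once other data is published through `some_value`.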
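A case where both halves of `AcqRel` do real work is a "last one out" counter. In the sketch below, which is an illustration and not taken from the example above, each worker writes a result and then decrements a shared `remaining` counter; whichever worker sees the old value 1 is last and sums every result. The `Shared` struct, the `WORKERS` constant, and the result values are assumptions made for this example.

```rust
use std::sync::atomic::{AtomicU64, AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;

const WORKERS: usize = 4;

/// Hypothetical shared state: one result slot per worker plus a countdown.
struct Shared {
    results: [AtomicU64; WORKERS],
    remaining: AtomicUsize,
}

/// Each worker stores (index + 1) * 10; the last worker to finish sums
/// all slots and that sum is returned.
fn sum_by_last_finisher() -> u64 {
    let shared = Arc::new(Shared {
        results: std::array::from_fn(|_| AtomicU64::new(0)),
        remaining: AtomicUsize::new(WORKERS),
    });

    let handles: Vec<_> = (0..WORKERS)
        .map(|i| {
            let shared = Arc::clone(&shared);
            thread::spawn(move || -> Option<u64> {
                shared.results[i].store((i as u64 + 1) * 10, Ordering::Relaxed);
                // Release half: publishes this worker's result.
                // Acquire half: the last worker sees every earlier result.
                if shared.remaining.fetch_sub(1, Ordering::AcqRel) == 1 {
                    Some(
                        shared
                            .results
                            .iter()
                            .map(|r| r.load(Ordering::Relaxed))
                            .sum(),
                    )
                } else {
                    None
                }
            })
        })
        .collect();

    let mut total = None;
    for handle in handles {
        if let Some(sum) = handle.join().unwrap() {
            total = Some(sum);
        }
    }
    total.expect("exactly one worker observes the final decrement")
}

fn main() {
    println!("sum computed by last worker = {}", sum_by_last_finisher());
}
```

With `Release` alone the last worker would not be guaranteed to see the other results, and with `Acquire` alone the other workers' results would not be published, which is why the decrement needs both.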

Example 4: Using SeqCst to Ensure Global Ordering

This example demonstrates how to use SeqCst to ensure a globally consistent order of operations.

    use std::sync::atomic::{AtomicUsize, Ordering};
    use std::sync::Arc;
    use std::thread;

    fn main() {
        // As in Example 1, Arc lets both threads own the counter.
        let counter = Arc::new(AtomicUsize::new(0));
        let counter_clone = Arc::clone(&counter);

        thread::spawn(move || {
            counter_clone.fetch_add(1, Ordering::SeqCst);
        })
        .join()
        .unwrap();

        println!("Counter: {}", counter.load(Ordering::SeqCst));
    }

- Similar to Example 1, this also performs an atomic increment operation on a counter.
- The difference is that `Ordering::SeqCst` is used here. `SeqCst` is the strictest memory ordering, ensuring not only the atomicity of individual operations but also a globally consistent execution order.
- `SeqCst` should be used only when strong consistency is required, such as:
  - Time synchronization,
  - Synchronization in multiplayer games,
  - State machine synchronization, etc.
- When using `SeqCst`, all `SeqCst` operations across all threads appear to be executed in a single, globally agreed order. This is useful in scenarios where the exact sequence of operations needs to be maintained.
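A single counter cannot show what the global order buys. A pattern that can is "store my flag, then load yours", sketched below with two flags; the function names and the round count are made up for this illustration. Because all `SeqCst` operations fall into one total order, at least one thread must observe the other's store, so both loads returning `false` in the same round is impossible.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;
use std::thread;

/// One round: each thread sets its own flag, then reads the other's.
/// Returns what thread 1 and thread 2 observed.
fn store_then_load_round() -> (bool, bool) {
    let a = Arc::new(AtomicBool::new(false));
    let b = Arc::new(AtomicBool::new(false));

    let t1 = {
        let (a, b) = (Arc::clone(&a), Arc::clone(&b));
        thread::spawn(move || {
            a.store(true, Ordering::SeqCst);
            b.load(Ordering::SeqCst)
        })
    };
    let t2 = {
        let (a, b) = (Arc::clone(&a), Arc::clone(&b));
        thread::spawn(move || {
            b.store(true, Ordering::SeqCst);
            a.load(Ordering::SeqCst)
        })
    };

    (t1.join().unwrap(), t2.join().unwrap())
}

/// Counts rounds in which neither thread saw the other's store.
fn count_both_missed(rounds: usize) -> usize {
    (0..rounds)
        .filter(|_| store_then_load_round() == (false, false))
        .count()
}

fn main() {
    println!("rounds where both missed: {}", count_both_missed(200));
}
```

With `Release` stores and `Acquire` loads the `(false, false)` outcome is permitted, because those orderings do not prevent a store from being reordered after a later load of a different variable.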

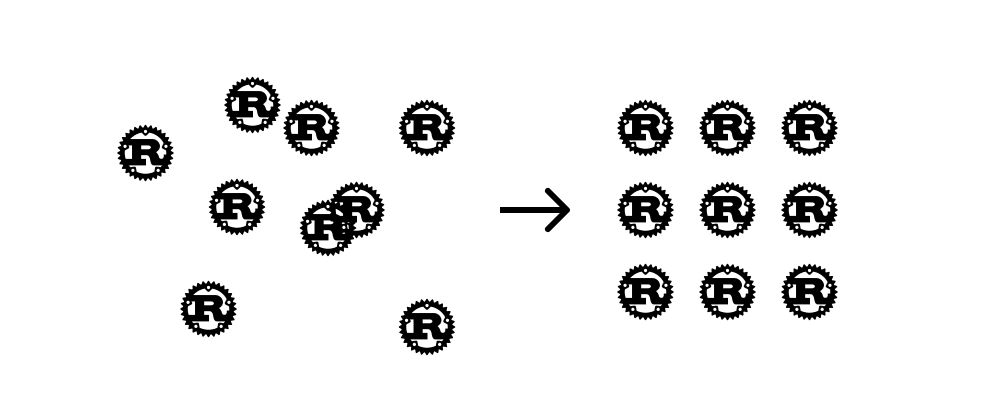

We are Leapcell, your top choice for hosting Rust projects.

Leapcell is the Next-Gen Serverless Platform for Web Hosting, Async Tasks, and Redis:

Multi-Language Support

- Develop with Node.js, Python, Go, or Rust.

Deploy unlimited projects for free

- pay only for usage — no requests, no charges.

Unbeatable Cost Efficiency

- Pay-as-you-go with no idle charges.

- Example: $25 supports 6.94M requests at a 60ms average response time.

Streamlined Developer Experience

- Intuitive UI for effortless setup.

- Fully automated CI/CD pipelines and GitOps integration.

- Real-time metrics and logging for actionable insights.

Effortless Scalability and High Performance

- Auto-scaling to handle high concurrency with ease.

- Zero operational overhead — just focus on building.

Explore more in the Documentation!

Follow us on X: @LeapcellHQ